Parameter-efficinet Fine-tuning

Pre-trained large-language models (LLMs) own a large set of parameters. Although these models achieve strong overall performance, they often struggle with tasks in which the data distribution differs significantly from their training set. Fine-tuning, i.e., the process of updating the parameters of a pre-trained model by training the model on a dataset specific to the task (see Fine-tuning), is a crucial technique to address this limitation [1].

Although fine-tuning adapts the model more effectively to specific tasks, pre-trained models often contain a large number of parameters, which reduces the efficiency of fine-tuning, particularly in terms of inference speed. To address this challenge, parameter-efficient fine-tuning (PEFT) [2] is employed. PEFT preserves the overall model architecture while updating only a small subset of parameters, thereby reducing computational overhead and improving both training efficiency and inference performance.

To this end, PEFT freezes the majority of the pre-trained parameters and layers, introducing only a small number of trainable parameters, known as adapters, into the final layers of the model for the task at hand. This approach allows fine-tuned models to retain the knowledge acquired during pre-training while efficiently specializing in their respective downstream tasks [2].

Low-rank Adaptation

Low-rank adaptation (LoRA) is an efficient PEFT technique that leverages

low-rank decomposition to reduce the number of trainable parameters.

Figure 1 shows the overall workflow of LoRA wherein LoRA freezes the

high-dimensional pre-trained weight matrix and decomposes that into two

lower-rank matrices, A and B. As a result,

rather than the high-dimensional pre-trained weight matrix, the two

low-rank matrices are updated during fine-tuning to capture task-specific

adaptations. After training, these matrices are merged with the original

weights to form an updated parameter matrix. This results in efficient

training without modifying most of the pre-trained parameters [3].

Implementation

In this section, we fine-tune the GPT-2 model. The complete implementation script is available on PEFT_LoRA.

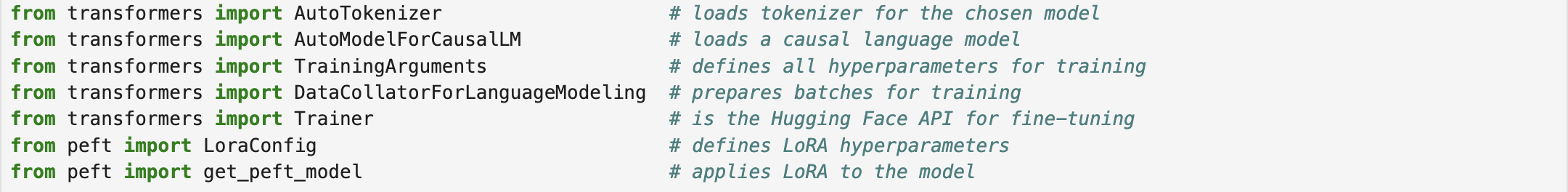

The fundamental components in fine-tuning a model using Hugging Face application programming interface (API) [4] are tokenizer, foundation model, training arguments, (hyperparameters), and data collator, and trainer API. We also need to import PEFT-related libraries into the program.

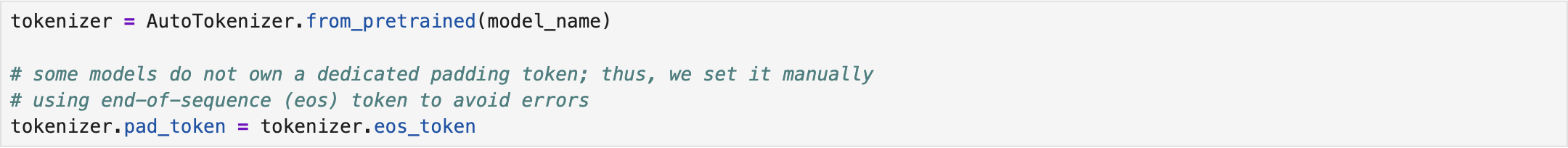

Tokenizer is responsible for tokenizing the inputs into tokens and encoding them to the corresponding token IDs. Each foundation model has its own tokenizer, developed based on the pre-defined vocabulary (or dictionary) for the model. For loading both tokenizer and model, we define a checkpoint w.r.t. the foundation model we are going to exploit for fine-tuning. Here, we have model_name = "gpt2".

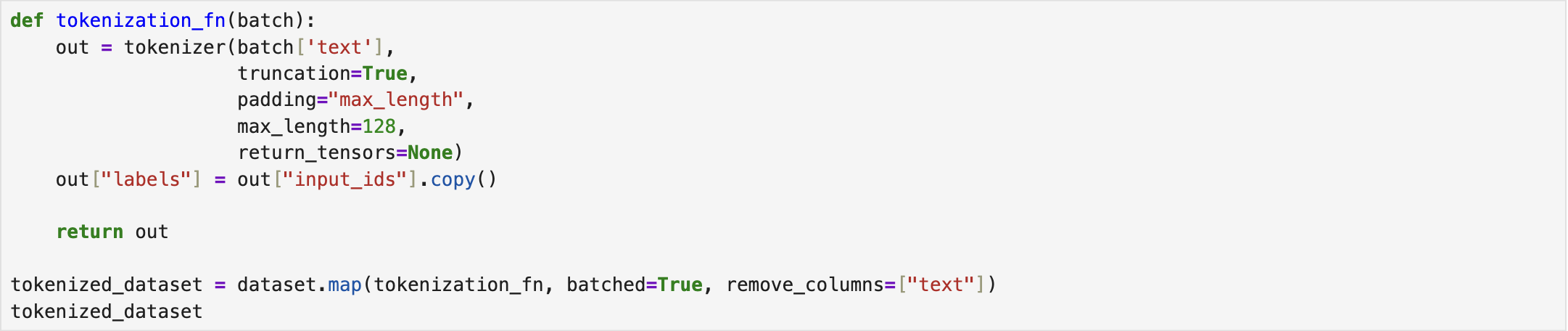

Truncation and padding are essential configurations that must be specified for the tokenizer. To achieve this, a dedicated function (e.g., tokenization_fn) is typically defined to set these parameters accordingly. Within this function, the max_length parameter plays a key role, as it determines the sequence length used for both truncation and padding.

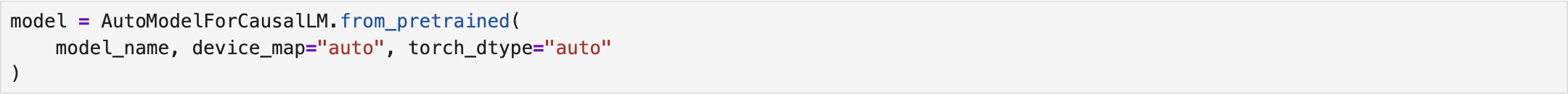

Next, we need to load the model from the pre-defined checkpoint. After loading the model, we define the LoRA configuration and modify the model accordingly.

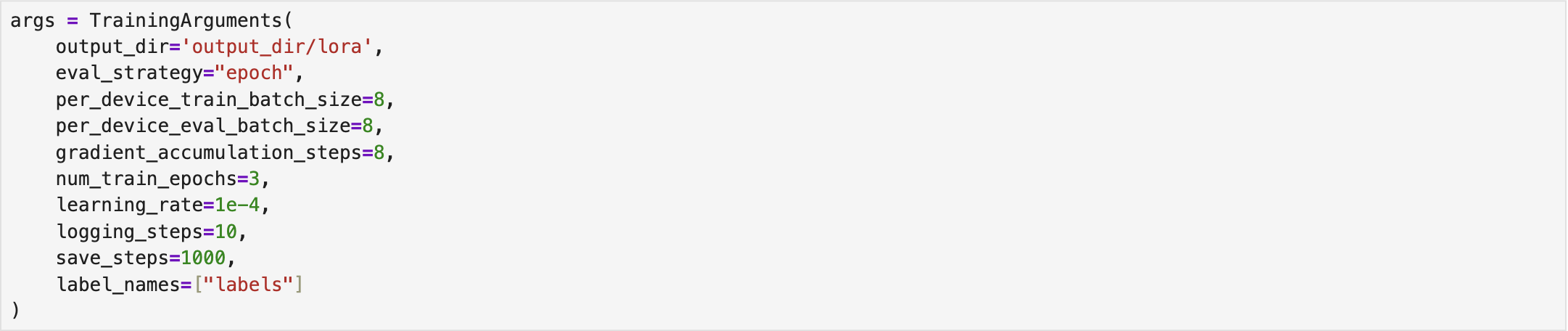

For fine-tuning the loaded model, training arguments must be properly defined. In this regard, we have

-

output_dir: the directory where checkpoints are saved.

-

eval_strategy: the evaluation strategy.

-

per_device_train/eval_batch_size: keeps virtual random access memory (VRAM) usage low.

-

gradient_accumulation_steps: simulates larger effective batch size without increasing VRAM.

-

num_train_epochs: number of passes over the dataset for fine-tuning.

-

learning_rate: learning rate.

-

logging_steps: number of steps for logging loss.

-

save_steps: number of steps to save checkpoints.

-

label_names: labels in the dataset.

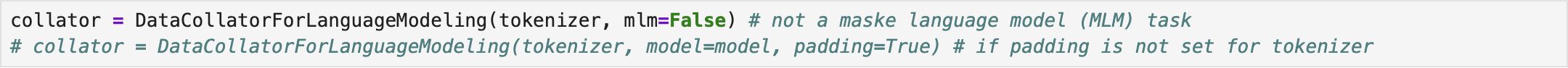

The data collator handles padding and batching. It takes a list of individual data samples and organizes them into a single and consistent batch using padding, creating attention masks, and handling special tokens.

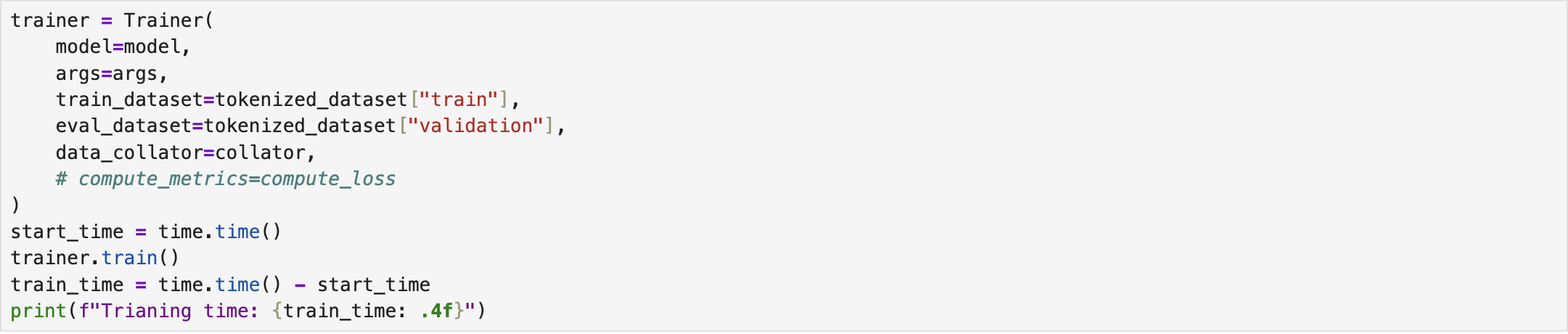

Finally, we define the trainer and perform the fine-tuning. We also record the training time.

Evaluation

To evaluate the performance of the fine-tuned model, we exploit perplexity, bilingual evaluation understudy (BLEU) [5], and recall-oriented understudy for gisting evaluation (ROUGE) [6].

-

Perplexity: it measures the model's uncertainty; lower perplexity implies that the model assigns higher probability to the actual next word in the sequence, resulting a more confident and accurate model.

-

BLEU: this metric evaluates the quality of machine-translated text by comparing it to human-created reference translations. To this end, it computes the overlap of n-grams between the machine-translated text and the reference translation.

-

ROUGE: it calculates precision, recall, and F1 score to quantify the overlap (n-grams) in words, phrases, and sequences between the machine-translated text and the reference translation.

Table I indicates the corresponding results. It is worth noting that achieving a highly efficient model requires fine-tuning on an appropriately selected dataset with a sufficient number of samples. However, the objective of this project is limited to reviewing the fine-tuning mechanisms in LLMs. Consequently, the resulting model performance may not be fully optimized.

| PPL | BLEU | ROUGE | Time (s) | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| bleu | unigrams | bigrams | trigrams | quadgrams | rouge1 | rouge2 | rougeL | rougeLSum | ||

| 65.4614 | 0.6626 | 0.6663 | 0.6638 | 0.6612 | 0.6591 | 0.7467 | 0.6738 | 0.7466 | 0.7473 | 83.3375 |

References

[1] M. R. J, K. VM, H. Warrier, and Y. Gupta, “Fine tuning llm for enterprise: Practical guidelines and recommendations,” 2024, https://arxiv.org/abs/2404.10779.

[2] C. Stryker and I. Belcic, “What is parameter-efficient fine-tuning (peft)?” International Business Machines (IBM), accessed: Aug. 15, 2024, https://doi.org/10.3115/1073083.1073135.

[3] E. J. Hu, Y. Shen, P. Wallis, Z. Allen-Zhu, Y. Li, S. Wang, L. Wang, and W. Chen, “Lora: Low-rank adaptation of large language models,” 2021, https://arxiv.org/abs/2106.09685.

[4] Hugging Face, http://huggingface.co/

[5] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a method for automatic evaluation of machine translation,” in Proceedings of the 40th Annual Meeting on Association for Computational Linguistics. USA: Association for Computational Linguistics, 2002, p. 311–318, https://doi.org/10.3115/1073083.1073135.

[6] C.-Y. Lin, “Rouge: A package for automatic evaluation of summaries,” in Annual Meeting of the Association for Computational Linguistics, 2004, https://api.semanticscholar.org/CorpusID:964287.

IEEE

IEEE Web of Science

Web of Science