Direct Preference Optimization

Language models (LMs) that acquire a broad spectrum of knowledge are typically trained in an unsupervised manner. However, the unsupervised nature of their training makes precise control over their behavior challenging. Reinforcement learning from human feedback (RLHF), commonly implemented with proximal policy optimization (PPO), has emerged as a promising approach to address this limitation. However, RLHF is complex because it requires first training a reward model to capture human preferences and then fine-tuning the unsupervised LM with reinforcement learning to maximize the estimated reward (see Fig. 1) [1].

In contrast, direct preference optimization (DPO) simplifies this process by bypassing the need for a separate reward model. Instead, it directly optimizes the LM using a loss function that encourages the generation of preferred responses over dispreferred ones, based on a dataset of human preferences. Figure 2 illustrates the overall DPO workflow [2].
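To make the loss function mentioned above concrete, the following is a minimal sketch of the per-example DPO objective from [2], written in plain Python. It assumes the four inputs are summed log-probabilities of the preferred (chosen) and dispreferred (rejected) responses under the model being trained and under a frozen reference model. The function and argument names are illustrative and are not taken from the project's script.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    # Branch on the sign so that exp() never receives a large positive value.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-example DPO loss: -log sigmoid(beta * (chosen ratio - rejected ratio))."""
    # Log-ratios of the trained policy against the frozen reference model.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)
```

When the policy equals the reference model, the margin is zero and the loss is log 2. The loss falls as the policy raises the likelihood of the preferred response relative to the dispreferred one.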

Implementation

In this section, we fine-tune the GPT-2 model using DPO. The complete implementation script is available on DPO.

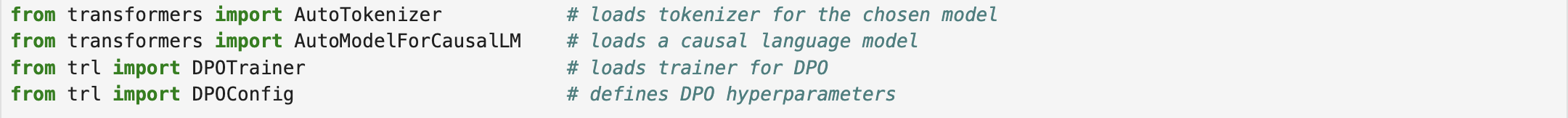

Fine-tuning a model with the Hugging Face application programming interface (API) [3] involves four fundamental components: the tokenizer, the foundation model, the training arguments (hyperparameters), and the trainer API, with the latter two configured for the DPO framework.

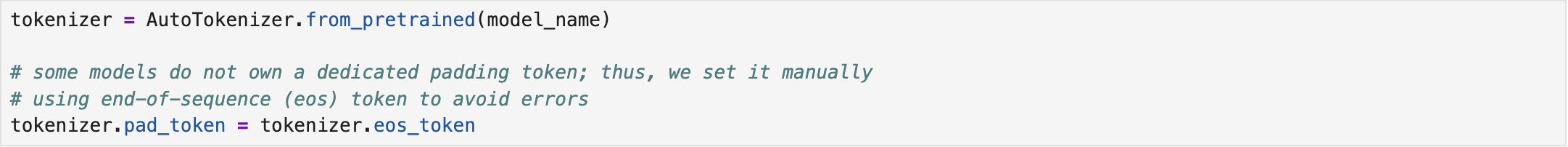

The tokenizer splits the input text into tokens and encodes them as the corresponding token IDs. Each foundation model has its own tokenizer, built on the model's predefined vocabulary (or dictionary). To load both the tokenizer and the model, we define a checkpoint that identifies the foundation model to be fine-tuned. Here, we have model_name = "gpt2".

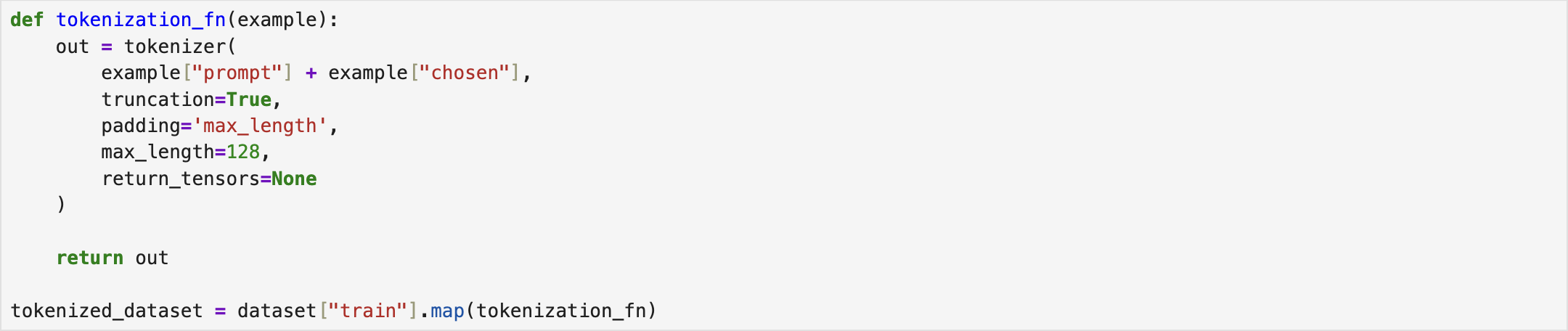

Truncation and padding are essential configurations that must be specified for the tokenizer. To achieve this, a dedicated function (e.g., tokenization_fn) is typically defined to set these parameters accordingly. Within this function, the max_length parameter plays a key role, as it determines the sequence length used for both truncation and padding.
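The effect of max_length can be illustrated without any library. The sketch below is a hypothetical helper, not the project's tokenization_fn: it cuts a list of token IDs to max_length and right-pads shorter inputs, returning the matching attention mask. With a Hugging Face tokenizer, the same behavior is usually requested through the `truncation`, `padding`, and `max_length` arguments of the tokenizer call.

```python
from typing import List, Tuple


def pad_or_truncate(
    token_ids: List[int], max_length: int, pad_id: int
) -> Tuple[List[int], List[int]]:
    """Return (input_ids, attention_mask), each exactly max_length long."""
    # Truncation: keep at most max_length tokens from the start.
    clipped = token_ids[:max_length]
    # Padding: fill the remainder with pad_id; the mask marks real tokens with 1.
    n_pad = max_length - len(clipped)
    input_ids = clipped + [pad_id] * n_pad
    attention_mask = [1] * len(clipped) + [0] * n_pad
    return input_ids, attention_mask
```

For example, `pad_or_truncate([1, 2, 3], 5, 0)` pads two positions, while a six-token input with `max_length=4` loses its last two tokens.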

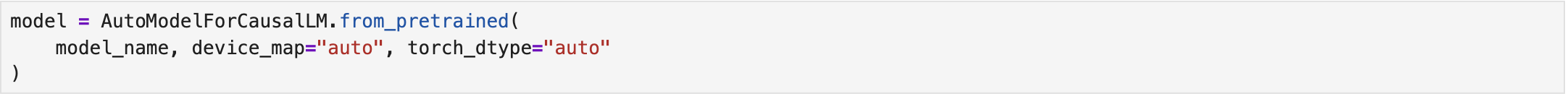

Next, we need to load the model from the pre-defined checkpoint.
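The two loading steps (tokenizer, then model) might look like the sketch below. It assumes the `transformers` library and its `AutoTokenizer` and `AutoModelForCausalLM` classes. The helper name is hypothetical, and the project's script may differ. Reusing the end-of-sequence token for padding is a common workaround, because GPT-2 does not define a padding token.

```python
MODEL_NAME = "gpt2"  # checkpoint named in the text


def load_tokenizer_and_model(model_name: str = MODEL_NAME):
    """Load the tokenizer and causal LM that belong to the same checkpoint."""
    # Imported lazily so this module can be imported without the dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # GPT-2 has no padding token; fall back to the end-of-sequence token.
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token

    model = AutoModelForCausalLM.from_pretrained(model_name)
    # Keep the model configuration consistent with the tokenizer's padding ID.
    model.config.pad_token_id = tokenizer.pad_token_id
    return tokenizer, model
```

Calling `load_tokenizer_and_model()` downloads the checkpoint on first use, so it needs network access or a local cache.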

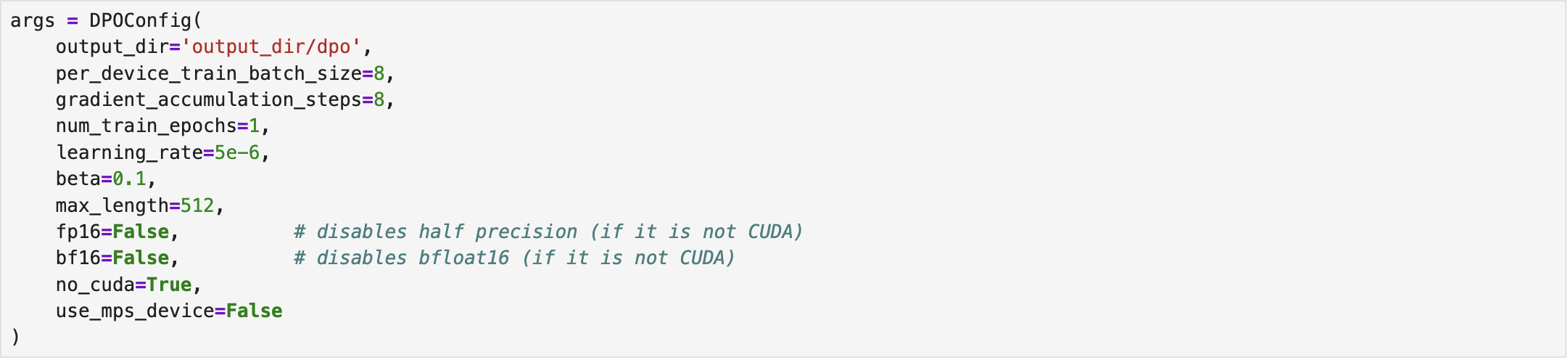

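After loading the model, we define the DPO configuration, which includes the following training arguments:

- output_dir: the directory where checkpoints are saved.
- eval_strategy: when evaluation is run (for example, every fixed number of steps or once per epoch).
- per_device_train/eval_batch_size: the number of samples per device in each training/evaluation batch; small values keep video random access memory (VRAM) usage low.
- gradient_accumulation_steps: the number of batches whose gradients are accumulated before each optimizer step; simulates a larger effective batch size without increasing VRAM usage.
- num_train_epochs: the number of passes over the dataset during fine-tuning.
- learning_rate: the optimizer's learning rate.
- beta: controls how strongly the policy is tied to the reference model; a higher value permits less deviation from it.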
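The arguments listed above can be collected in a plain dictionary, as in the sketch below. All values are illustrative assumptions, since the source does not state the ones it used, and the output path is hypothetical. The helper shows how gradient accumulation changes the effective batch size. Assuming the TRL library, which the source does not name explicitly, such a dictionary could be unpacked as `DPOConfig(**dpo_args)`.

```python
# Illustrative values only; the source does not state the ones it used.
dpo_args = {
    "output_dir": "./dpo-gpt2",  # hypothetical path
    "eval_strategy": "epoch",
    "per_device_train_batch_size": 2,
    "per_device_eval_batch_size": 2,
    "gradient_accumulation_steps": 8,
    "num_train_epochs": 1,
    "learning_rate": 5e-6,
    "beta": 0.1,
}


def effective_batch_size(args: dict, num_devices: int = 1) -> int:
    """Number of samples that contribute to each optimizer step."""
    return (
        args["per_device_train_batch_size"]
        * args["gradient_accumulation_steps"]
        * num_devices
    )
```

With these example values, each optimizer step on a single device uses 2 × 8 = 16 samples, while only 2 samples are held in memory at a time.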

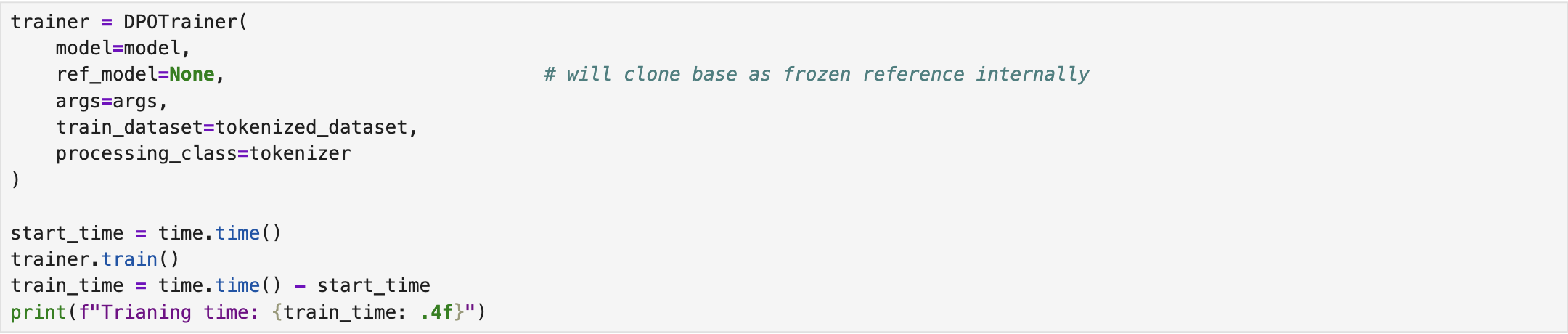

Finally, we define the trainer and perform the fine-tuning. We also record the training time.
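One simple way to record the training time is to wrap the training call in a wall-clock timer. The helper below is a hypothetical sketch using only the standard library. In practice the callable passed to it would be the trainer's training method.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def timed(fn: Callable[[], T]) -> Tuple[T, float]:
    """Run fn once and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn()
    elapsed = time.perf_counter() - start
    return result, elapsed


# Stand-in workload; a real run would pass the training call instead.
total, seconds = timed(lambda: sum(range(1000)))
```

`time.perf_counter` is used rather than `time.time` because it is monotonic and suited to measuring intervals.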

Evaluation

To evaluate the performance of the fine-tuned model, we use preference accuracy, i.e., the percentage of preference pairs in which the model favors the response preferred by humans. Table I reports the corresponding results. It is worth noting that achieving a high-performing model requires fine-tuning on an appropriately selected dataset with a sufficient number of samples. However, the objective of this project is limited to reviewing the fine-tuning mechanisms in large language models (LLMs). Consequently, the resulting model performance may not be fully optimized.

| Preference Accuracy (%) | Training Time (s) |
|---|---|
| 45.80 | 826.8992 |
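The source does not specify how preference accuracy was computed. A common definition, assumed here, assigns each response a scalar score (for DPO, typically the implicit reward: beta times the log-probability ratio between the fine-tuned and reference models) and counts the pairs in which the preferred response scores strictly higher. The function name and inputs below are illustrative.

```python
from typing import Sequence


def preference_accuracy(
    chosen_rewards: Sequence[float], rejected_rewards: Sequence[float]
) -> float:
    """Percentage of pairs where the chosen response scores strictly higher."""
    if len(chosen_rewards) != len(rejected_rewards):
        raise ValueError("reward lists must have the same length")
    if not chosen_rewards:
        raise ValueError("at least one preference pair is required")
    # A tie counts as a miss, since the model did not favor the chosen response.
    wins = sum(c > r for c, r in zip(chosen_rewards, rejected_rewards))
    return 100.0 * wins / len(chosen_rewards)
```

Under this definition, a model that scores the two responses in each pair at random would be expected to reach about 50%.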

References

[1] L. Ouyang, J. Wu, X. Jiang, D. Almeida, C. L. Wainwright, P. Mishkin, et al., “Training language models to follow instructions with human feedback,” 2022, https://arxiv.org/abs/2203.02155.

[2] R. Rafailov, A. Sharma, E. Mitchell, S. Ermon, C. D. Manning, and C. Finn, “Direct preference optimization: Your language model is secretly a reward model,” 2024, https://arxiv.org/abs/2305.18290.

[3] Hugging Face, http://huggingface.co/
